A few years ago, Casey Harrell was diagnosed with a devastating brain disease. Known as amyotrophic lateral sclerosis, or ALS, it left him paralyzed and unable to speak. But last year, a doctor implanted tiny electrodes in the speech center of Harrell’s brain. They pick up brainwaves that a computer can convert into words. Today, the 46-year-old man can once again converse.

Using recordings of his voice from before his disease, scientists were able to train an artificial intelligence model. With its help, Harrell now “speaks” through an electronic voice synthesizer. And thanks to that AI, it sounds similar to the natural voice he once had.

“One of the things that people with my disease suffer from is isolation and depression,” Harrell says, using the new technology. These individuals don’t feel like they matter anymore, he says. But thanks to this tech, he and others like him might be able to actively participate in society again.

This new technology offers “by far the most accurate speech-decoding ever described,” says David Brandman. He’s the neurosurgeon with the University of California, Davis Health who implanted the devices in Harrell’s brain. His team shared details of how the tech works in the August 14 New England Journal of Medicine.

This technology isn’t a mind reader, Brandman emphasizes. It can’t listen to someone’s secret thoughts. It only works when the user wants it to — when they are trying to speak.

“There are … thousands of people in the U.S. right now who want to talk, but can’t,” says Brandman. “They are trapped in their own bodies.”

One day, this technology might help many of them get their voice back.

The tech behind it all

Electrical signals from the brain travel along neurons to control every movement in the body. Different parts of the brain turn on each time you wave your hand, run or smile. Those electrical signals travel down pathways to activate the muscles you want to use.

But injury or disease can damage parts of those neural pathways. This can keep the electrical signals from reaching the muscles needed to move or speak.

Sergey Stavisky is a neuroscientist at UC Davis. Together, he and Brandman run a neuroprosthetics lab to restore abilities people have lost due to brain injury and disease. Since 2021, their team has been working to restore speech through technology known as brain-computer interfaces, or BCIs.

BCIs use implants to tap into brain signals. A computer learns to interpret what the brain signals mean. Later, it can translate those signals into machine motion. It’s how a person can control a robotic arm using their thoughts.
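What does it mean for a computer to "learn" what brain signals mean? Here is a toy Python sketch of one very simple approach, called a nearest-centroid classifier. It assumes each recording has already been boiled down to just two numbers (real recordings hold far more). It averages the practice examples for each intended movement, then labels a new signal with whichever average it sits closest to. The data, labels and function names are made up for illustration. The research teams in this story use far more advanced math.

```python
import math

# Hypothetical practice data: (simplified signal features, intended movement).
# These numbers are invented for illustration, not real brain recordings.
TRAINING_EXAMPLES = [
    ([0.9, 0.1], "move arm left"),
    ([1.1, 0.2], "move arm left"),
    ([0.1, 1.0], "move arm right"),
    ([0.2, 0.8], "move arm right"),
]


def learn_centroids(examples):
    """The 'learning' step: average the feature vectors for each label."""
    sums = {}
    counts = {}
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def decode(features, centroids):
    """Pick the label whose average pattern is closest to the new signal."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))


centroids = learn_centroids(TRAINING_EXAMPLES)
print(decode([1.0, 0.15], centroids))
```

Running it prints "move arm left," because the new signal lands right on the average of the "left" examples.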

Their work is part of a long-running study known as BrainGate. It tests how safe the implants are in people who have become paralyzed. It also works to improve such devices so that they can help people regain better control of their environment.

Harrell decided to take part.

This penny offers some perspective on the size of the microelectrode array (left) that gets implanted into a patient’s brain. Its pointy square array is about 3 millimeters across. UC Davis Health

Translating brain signals into words

In July 2023, Brandman surgically placed four tiny devices into the part of Harrell’s brain that controls speech. Each device has 64 metal prongs that help it detect electrical signals being relayed by neurons in his brain.

On paper, a recording of those signals looks like a series of waves.

After Harrell recovered from surgery, the researchers visited him at home. They attached a recording device to his head and turned on their BCI. It took the system a few minutes to learn how to decode Harrell’s brain signals as he tried to speak. Then, it began turning those brain signals into words.

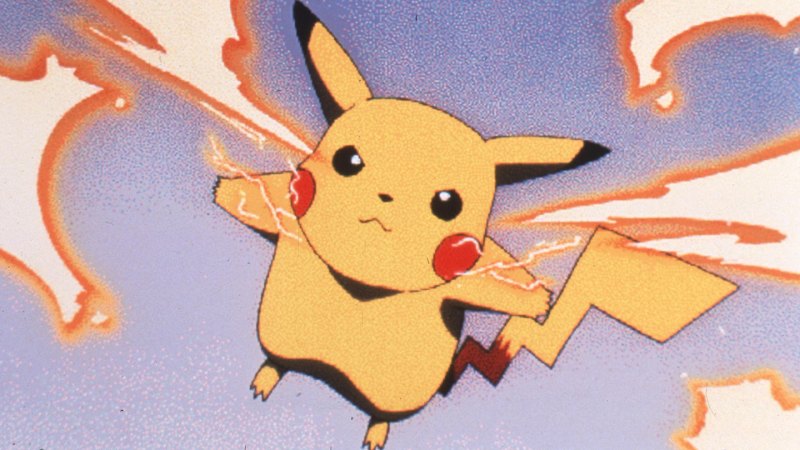

As we speak, our brain breaks down each word into small bites of sound. Each sound bite is known as a phoneme (FO-neem). For example, the word “moose” is made from three different sound bites: the “m,” the “oo” and the “ss.”

Each phoneme produces a unique electrical signal in the brain. It’s like a fingerprint. Math can tell the technology how to turn those signals into the actual words someone was trying to say. Then words appear as sentences on a computer screen and are “spoken” out loud by a computer’s speaker.
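Here is a toy Python sketch of that last step, turning guessed phonemes into words. It assumes a decoder has already guessed one phoneme for each brief slice of time, so a sound repeats while it is being "said," and that "_" marks a pause between words. The sketch squeezes out the repeats, splits at the pauses and looks each group up in a tiny pronunciation dictionary. The phoneme spellings, the dictionary and the function names are all hypothetical. Real speech decoders use far more sophisticated statistics to choose among likely words.

```python
# Hypothetical pronunciation dictionary: phoneme sequence -> word.
PRONUNCIATIONS = {
    ("m", "oo", "ss"): "moose",
    ("h", "ai"): "hi",
}

SILENCE = "_"  # hypothetical marker for a pause between words


def collapse_repeats(frames):
    """Turn ['m', 'm', 'oo', 'oo', 'ss'] into ['m', 'oo', 'ss']."""
    collapsed = []
    for phoneme in frames:
        if not collapsed or collapsed[-1] != phoneme:
            collapsed.append(phoneme)
    return collapsed


def phonemes_to_words(frames):
    """Split the collapsed phonemes at pauses and look up each chunk."""
    words = []
    chunk = []
    # Add a final pause so the last word gets looked up too.
    for phoneme in collapse_repeats(frames) + [SILENCE]:
        if phoneme == SILENCE:
            if chunk:
                # Unknown sound patterns become "?" instead of a guess.
                words.append(PRONUNCIATIONS.get(tuple(chunk), "?"))
                chunk = []
        else:
            chunk.append(phoneme)
    return " ".join(words)


frames = ["h", "h", "ai", "ai", "_", "_", "m", "oo", "oo", "oo", "ss", "ss"]
print(phonemes_to_words(frames))  # hi moose
```

One shortcut to notice: squeezing out repeats would also merge a sound that really is said twice in a row. That is one of many problems a real system has to handle.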

This image maps brainwaves emitted by neurons. Those waves correspond to electrical signals in the brain. Here, the map shows how the waves differ when Casey Harrell was trying to say the words “newspaper” and “delivery.” UC Davis Health

On the second day of using the system, Harrell was able to speak with his young daughter for the first time that she could remember.

Until he used the new system, Harrell needed the assistance of a trained interpreter to communicate what he wanted to say. But that was difficult. He could only get across about six words per minute correctly, says Brandman. For context: The average person speaks about 120 to 150 words per minute.
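To see what those speeds mean in practice, this short Python sketch works out how long a 30-word message would take at each rate. The 30-word message length and the function name are made up for illustration. Only the speaking rates come from the story.

```python
def seconds_to_say(word_count, words_per_minute):
    """Seconds needed to get a message across at a given rate."""
    return word_count / words_per_minute * 60


MESSAGE_WORDS = 30  # hypothetical message length, chosen for illustration

RATES = [
    ("Harrell with an interpreter", 6),
    ("average speaker, slower end", 120),
    ("average speaker, faster end", 150),
]

for label, words_per_minute in RATES:
    seconds = seconds_to_say(MESSAGE_WORDS, words_per_minute)
    print(f"{label}: about {seconds:.0f} seconds")
```

It prints about 300 seconds (five minutes) with the interpreter versus 15 and 12 seconds for a typical speaker. Put another way, 120 ÷ 6 = 20 and 150 ÷ 6 = 25, so the old method was 20 to 25 times slower than ordinary talking.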

Harrell can now have conversations using the technology to share his thoughts, needs and wants. He also can show his sense of humor. Today, he uses the tech up to 12 hours a day to talk with family, friends and coworkers. Harrell still works as a climate activist.

Casey Harrell communicates using the brain-computer interface for the first time.

Not the first brain-to-speech system

Brandman and Stavisky’s group is not the first to show such tech has promise. But they say theirs is the first that someone can use at home to communicate all day long, every day — without the need for on-scene technicians.

Other scientists have implanted similar devices into someone’s brain before. But those devices had half as many prongs to pick up brain signals. In tests, such implants proved accurate for three out of every four words, on average. Getting one fourth of all words wrong made communicating hard, says Brandman. Still, it did show the idea could work.

Brandman and Stavisky now use implants with twice as many prongs. More prongs means their devices can better “hear” nerve signals. The researchers also improved the math used by their BCI. Together these changes boosted the accuracy of its speech decoding.

Over 32 weeks, this tech correctly interpreted what Harrell wanted to say about 97 percent of the time. That means it got roughly 97 out of every 100 words right, Brandman explains. And its accuracy is still improving.
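The accuracy numbers are easier to picture as "how often does it slip?" This short Python sketch converts the roughly 75 percent accuracy of the earlier implants and the roughly 97 percent reported for Harrell into mistakes per 100 words and the average number of words between mistakes. The function name is made up for illustration. Treating accuracy as a simple fraction of words right is also a simplification: researchers often report a word error rate, which also counts words the decoder adds or skips.

```python
def words_between_mistakes(accuracy_percent):
    """Average number of words between errors at a given accuracy."""
    error_fraction = (100 - accuracy_percent) / 100
    return 1 / error_fraction


for label, accuracy_percent in [("earlier implants", 75), ("Harrell's BCI", 97)]:
    wrong_per_100 = 100 - accuracy_percent
    gap = words_between_mistakes(accuracy_percent)
    print(f"{label}: {wrong_per_100} of every 100 words wrong, "
          f"about one mistake every {gap:.0f} words")
```

At 97 percent, that works out to roughly one slip every 33 words, compared with one in every 4 words for the older implants.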

Other research groups are also working on computerized devices to decode people’s brain signals into speech.

Chris Crawford is a computer scientist at the University of Alabama in Tuscaloosa. He directs its Human-Technology Interaction Lab. There, he creates computer programs to run wearable BCIs. People who are paralyzed by disease or injury “live with these impairments every day,” he says. That’s why these systems are so important. They can potentially help people regain functions they once thought were forever lost.

Like Crawford, Roya Salehzadeh did not work on the BrainGate technology. But she says research like this could help engineers such as herself. At Lawrence Technological University in Southfield, Mich., she works on BCIs and human-robot interactions. BCIs, she says, could help someone drive their wheelchair, just by using information that comes from their brain. Or, she notes, BCIs could detect emotions or stress in people who can’t communicate.

How long until these systems become widely available? Brandman thinks doctors may be able to prescribe a BCI like the one Harrell uses within the next five to 10 years.

This is one in a series presenting news on technology and innovation, made possible with generous support from the Lemelson Foundation.